One of my Friday morning rituals is to sip my coffee and look up my h-index on Web of Science (for my biomedical peeps, that's our equivalent of PubMed). For those of you who may not know what I am talking about, your h-index is the largest number N such that N of your papers have each been cited at least N times. It is supposed to provide a metric for comparing the productivity and impact of researchers within a field, and to integrate both the number of papers you have and the "impact" of those papers. The idea is that having more papers will only help you if they are also well cited. As with any metric that purports to quantify scientists, it is highly controversial. (Does anyone else see the irony that we are obsessed with quantifying every aspect of nature except ourselves?) Regardless of its weaknesses, I have to admit I have recently become a little obsessed with my h-index.
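For the curious, here is a minimal sketch of that definition in Python, assuming you already have a list of per-paper citation counts (the function name `h_index` and the sample numbers are hypothetical, not pulled from Web of Science):

```python
def h_index(citations):
    """Return the largest N such that N papers have at least N citations each."""
    # Rank papers from most cited to least cited.
    ranked = sorted(citations, reverse=True)
    h = 0
    for rank, cites in enumerate(ranked, start=1):
        # The paper at position `rank` needs at least `rank` citations.
        if cites >= rank:
            h = rank
        else:
            # Counts only go down from here, so no later paper can qualify.
            break
    return h


# Hypothetical citation counts for seven papers.
print(h_index([25, 8, 5, 3, 3, 1, 0]))  # → 3
```

Notice how little it takes to tip over: if the fourth paper in that hypothetical list picked up just one more citation (4 instead of 3), the h-index would jump from 3 to 4.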

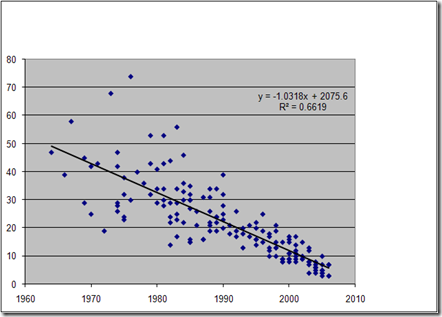

I'm not sure I can tell you when or why my obsession began. One day, I looked up my h-index (which Web of Science does so easily for you) and then looked up the h-indices of people whose league I am not in but desperately wish I were (henceforth referred to by their official name: The League of Super Ecologists). At one point, when I was kicked by my promotion committee for not doing something "needed for tenure" that actually had nothing to do with getting tenure here or at any other university, I "showed" my university by wasting an inordinate amount of time developing an h-index graph for the League of Super Ecologists. (Since this was part of my mental discussion with myself about whether or not to try to leave Confused U, this was actually a necessary step for me to convince myself I had a shot in hell on the job market.) However, in order to understand how I ranked compared to the League of Super Ecologists, I had to correct for time since Ph.D. (obviously, the longer you've been out, the greater the potential for a higher h-index). For those of you who are curious, here it is (x-axis: year Ph.D. awarded; y-axis: h-index).

At this point, I should be clear that this is not exactly a random list. This is a plot of people whose work I respect and who have some general name recognition in the biz. I collected data on 162 ecologists before I stopped being mad at my university. (I was really, really pissed.) I think General Disarray was a little worried about me during this time of my life (the words "unhealthy" and "obsessed" may have been used). And it is easy to become unhealthily fixated on these metrics, as DrugMonkey pointed out a few weeks ago. In a job where criticism of one's performance abounds (I present as my evidence: proposal reviews, manuscript reviews, promotion and tenure reviews, the poor demoralized post-doc on the job hunt), the h-index can seem like a source of positive feedback. Every time the h-index clicks upwards, every time your citation count increases, it can feel like positive validation of your existence. Oh, it's a seductive and alluring siren call, one that can end with your nerves wrecked on the scotch-and-rocks.

But my obsessed exercise did give me some interesting bits of information. First, I'll say I didn't do as badly as I thought when compared to members of the League. General Disarray thinks I undervalue myself (I can neither confirm nor deny). Having data suggesting that he might potentially possibly be right was important for my future planning. Since I do not count General Disarray as objective, having data gave me confidence that perhaps I might not do that badly on the job market. Second, at least for the list created in my mind, there is a shockingly good relationship between time since Ph.D. and h-index. I mean, aside from some heteroscedasticity evidenced by the increasing variance with academic age, many ecologists would kill for such a nice-looking relationship from their field studies. I haven't decided how to interpret the graph, since it is from my imaginary League of Super Ecologists (not sure those selection criteria would fly in a Methods section, for example). I suspect that if one could really take a "random sample" of ecologists, the graph would look more like a triangle, with dots filling in below the "edge" that appears in my graph, but that the low variance for the young people would remain and the variance would increase substantially with age.

So, if I'm doing better than I thought I was, you may be wondering why I am still obsessed with my h-index. It's not because I'm unhappy with my number or desperately want more people to cite me. Truth is, I am a shockingly small number of citations away from becoming a teen-h'er, and watching my h-index teeter on the edge of a new number for months has been slowly driving me INSANE. Like watching a golf ball perched precariously on the edge of the cup but refusing to fall in. Or fingers on a blackboard. Or waiting for a loved one to finally get off the plane and come through airport security. Or being a teenager who desperately just wants to be an adult. Auuugh. (Yes, I do have patience issues.) Is this the week when it will finally teeter over??? NO. Well, crap. Maybe next week.

11 comments:

...why not take a random sample?

it would be interesting to see if the h-index is normally distributed in general...

mmm the possibilities... mmmm stats...mmm

The problem I ran into is that I couldn't figure out what pool one would actually make the random draw from! All authors who have published in certain journals in the past year? Would you then need to randomly choose the journals you would pull names from? Is the better pool all ecologists with faculty positions during the past 5 years? Then you miss post-docs and non-academic researchers... and how do you get that list? That's when I decided I needed to get back to work on things I could get published! I agree with you, though, it would be really fascinating!!!

Very interesting indeed. Curiously my h-index is pretty much where your line would predict it to be, despite the fact I'm very much not an ecologist. (Or in a Super League of any kind.) I guess that's just an illustration of differences between fields.

Whereas I'm substantially below your line - I've speculated about this on my blog today, a great way not to grade...

Does ESA keep a membership list that is categorized by job type? You could get a random sample from something like that.

Very interesting. All but one of my publications have come out in the last year, so it will be neat to see how my h-index changes in the next year or two as people start to cite my recent manuscripts.

What about some kind of correction for placement in the author list? For example, any authorship position other than first, asterisked shared-first (second), or last could be given less weight. The 27-author Science paper usually doesn't have 27 equally contributing authors...

odyssey - I find the field differences pretty interesting. The first time I really thought about it was the discussion over at DrugMonkey about the size of their meetings. Ecology's big meeting is ~4-5k. Their meetings were closer to 30k. That's a big difference in the number of people writing and citing papers! But I don't think there's been any systematic study of this.

Ecogeofemme - oh, that's a great idea. ESA does maintain a directory, though it does not break out members by job type. You might actually be able to do the "random ecologist" plot that way.

Anon--Some institutions have tenure rules that weight how much a paper "counts" based on the authorship position of the faculty member. However, as faculty members, part of what we should be doing is involving our students in our research and allowing them to have leadership roles on those projects. I have seen these "authorship position rules" create cultures where young faculty (who otherwise have seemed like ethical people) run roughshod over their students and make unethical authorship demands. Of course, I've also seen authorship leeches who have literally done nothing on a large number of many-author papers and are deep in the author line on all of them. I guess what I'm saying is that while I understand the frustration with large author-line papers, these rules for which papers count or do not count can have unintended consequences that harm the scientific development of both students and young faculty, especially in a field like ecology, where we really HAVE to become more collaborative to answer the important questions.

for better, mostly worse, forced collaboration with super ecologists (at least for me personally) involves NO INPUT, NO MENTORING, NO LOGISTICAL HELP from "supers"... just a wham bam here you go ma'am, stick me last on the paper, bu-bye now.

The only thing most supers contribute is their name; they hog the last-author slot. And it seems that the supers all cluster with the supers, and it's only when I have a super idea for a paper that they deviate from their network of cronies to help little ol' me (non-super). So, supers exponentially raise their crony super stats through super collaborations while the rest of us underlings rummage for the scraps that fall. I work with someone who somehow mooches his way onto every.freakin.paper, and I swear he wouldn't know what the papers were about even if I explained them to him the Dr. Seuss way.

Plus, I can count on 2 hands, barely, the number of women "supers" in my field - depressing.

Hi, you know, seeing the differences between your plot and mine makes me think that there probably IS something real there, and that it wouldn't be totally daft to write a little paper, maybe for one of the ecological society newsletters, e.g. the British Ecological Society Bulletin, which takes short notes and so on. In the UK, the h-index is being proposed as one of the new metrics for assessing research quality from 2012; that assessment will control the amount of government research-stream funding for whole departments for multiple-year periods... it's already looked at for appraisals and so on. Would you be interested in collaborating on something like that? My email is mollimog at gmail dot com if you want to discuss it...

The inventor of the h-index recognized there would be this correlation and proposed the "m-index" to deal with it. m is just h divided by the number of years since your first publication. Basically, it's the slope of your h-index line over time; a larger m means your h-index is climbing faster.

I would expect m-index to be closer to having no correlation with years since Ph.D. Though it would still probably be slightly down-sloping, just because people with higher m-indexes are more likely to have lasted longer.
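As a rough sketch of the m-index described above, here it is in Python. The function name and the example researcher are hypothetical, and counting years as "evaluation year minus first-publication year" is an assumption; some people add one so that a first-year researcher isn't divided by zero.

```python
from datetime import date


def m_index(h, first_pub_year, current_year=None):
    """m = h divided by years since first publication (hypothetical helper)."""
    if current_year is None:
        current_year = date.today().year
    # Assumed convention: years = current year minus first-publication year.
    years = current_year - first_pub_year
    if years <= 0:
        raise ValueError("need at least one year since the first publication")
    return h / years


# Hypothetical researcher: h = 12, first paper in 1998, evaluated in 2010.
print(m_index(12, 1998, 2010))  # → 1.0
```

Because m divides out career length, plotting m instead of h against year of Ph.D. is one way to check whether the strong age trend in the plot above mostly disappears.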
